The TinyRadarNN datasets are a collection of radar recordings of hand gestures, collected by the Integrated Systems Lab at ETH Zurich using Acconeer XR111 and XR112 sensor modules [1].

This project is brought to you by Moritz Scherer, Michele Magno, Jonas Erb, Philipp Mayer, Manuel Eggimann and Luca Benini of the Digital Circuits and Systems Group of ETH Zurich (https://iis.ee.ethz.ch/), with the support of the Project Based Learning Center (https://pbl.ee.ethz.ch/).

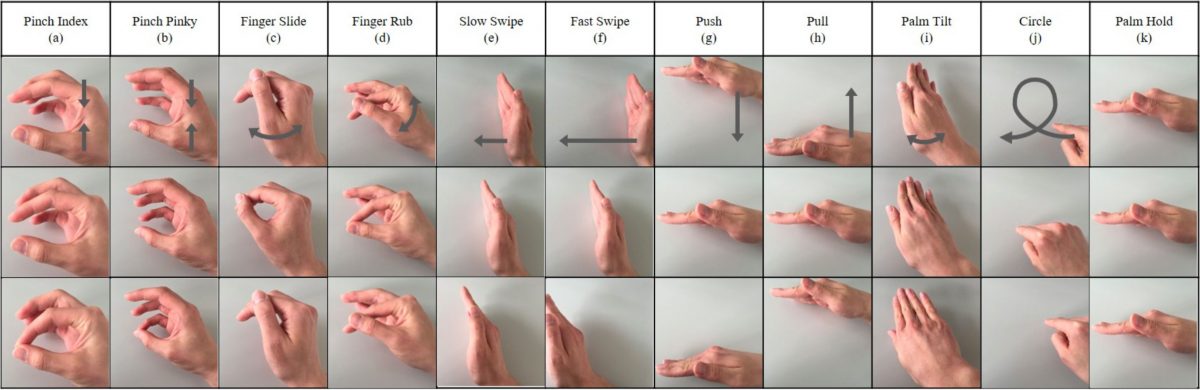

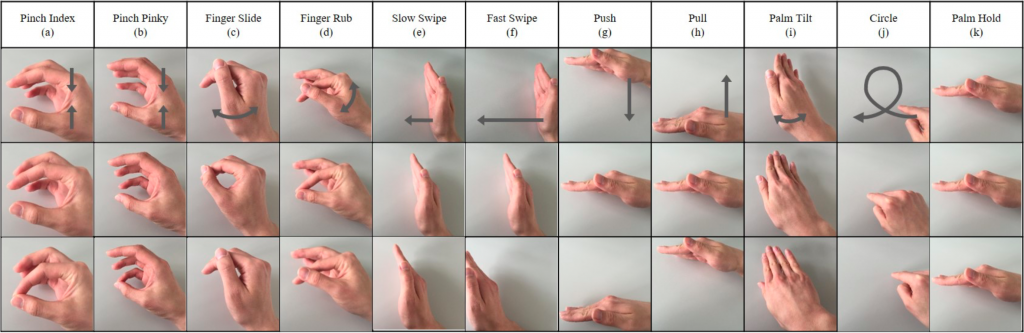

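The data is divided into a dataset with 5 gesture classes (5G) and a dataset with 11 gesture classes (11G). The 11G dataset has a single-user part (SU), recorded from one person, and a multi-user part (MU), recorded from 26 people.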

The dataset parameters are shown in the table below.

| Parameter | 5G | 11G (SU) | 11G (MU) |
|---|---|---|---|
| Sweep frequency | 256 Hz | 160 Hz | 160 Hz |
| Sensors | 1 | 2 | 2 |
| Recording length | 3 s | 3 s | 3 s |
| Number of people | 1 | 1 | 26 |
| Instances per session | 50 | 7 | 7 |
| Sessions per recording | 10 | 5 | 5 |
| Recordings | 1 | 20 | 26 |
| Instances per gesture | 500 | 700 | 910 |
| Instances per person | 2500 | 7700 | 385 |
| Total instances | 2500 | 7700 | 10010 |
| Sweep range | 10-30 cm | 7-30 cm | 7-30 cm |
| Sensor module | XR111 | XR112 | XR112 |
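The derived rows of the table (instances per gesture, instances per person, total instances) follow from the base rows by multiplication. The sketch below recomputes them. It assumes, based on the 5G column (50 × 10 × 1 = 500), that "Instances per session" counts repetitions of each gesture in one session, that "Recording length" is the duration of a single gesture instance, and that the sweep frequency applies to each sensor. The `DatasetParams` class and its method names are hypothetical and not part of the dataset's own code.

```python
# Hypothetical sketch: recompute the derived rows of the parameter table.
# Assumptions (not stated in the dataset docs): "Instances per session" is the
# number of repetitions of EACH gesture per session, "Recording length" is the
# duration of one gesture instance, and the sweep rate applies per sensor.
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetParams:
    name: str
    gesture_classes: int
    sweep_frequency_hz: int
    recording_length_s: int
    people: int
    instances_per_session: int
    sessions_per_recording: int
    recordings: int

    def instances_per_gesture(self) -> int:
        # repetitions per session x sessions per recording x recordings
        return (self.instances_per_session
                * self.sessions_per_recording
                * self.recordings)

    def total_instances(self) -> int:
        # every gesture class is recorded equally often
        return self.instances_per_gesture() * self.gesture_classes

    def instances_per_person(self) -> int:
        # assumes each person contributed the same number of instances
        return self.total_instances() // self.people

    def sweeps_per_instance(self) -> int:
        # number of radar sweeps in one instance window, per sensor
        return self.sweep_frequency_hz * self.recording_length_s


DATASETS = [
    DatasetParams("5G", 5, 256, 3, 1, 50, 10, 1),
    DatasetParams("11G (SU)", 11, 160, 3, 1, 7, 5, 20),
    DatasetParams("11G (MU)", 11, 160, 3, 26, 7, 5, 26),
]

if __name__ == "__main__":
    for d in DATASETS:
        print(f"{d.name}: {d.instances_per_gesture()} per gesture, "
              f"{d.total_instances()} total, "
              f"{d.instances_per_person()} per person, "
              f"{d.sweeps_per_instance()} sweeps per instance")
```

For example, 11G (MU) gives 7 × 5 × 26 = 910 instances per gesture, 910 × 11 = 10010 instances in total and 10010 / 26 = 385 instances per person, while a 3 s window at 160 Hz contains 480 sweeps.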

License

The dataset is distributed under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license. All code is distributed under the Apache-2.0 license.

If you find this dataset useful in your research, please cite the associated paper, which is available on arXiv (arXiv:2006.16281), using the citation below.

Citation:

@misc{scherer2020tinyradarnn,
  title={TinyRadarNN: Combining Spatial and Temporal Convolutional Neural Networks for Embedded Gesture Recognition with Short Range Radars},
  author={Moritz Scherer and Michele Magno and Jonas Erb and Philipp Mayer and Manuel Eggimann and Luca Benini},
  year={2020},
  eprint={2006.16281},
  archivePrefix={arXiv},
  primaryClass={eess.SP}
}

Contact:

For questions, please contact Moritz Scherer: scheremo@iis.ee.ethz.ch